Cybersecurity is home to increasingly specialized tools. However, in practice, the data that cybersecurity teams care about and use every day has heavy overlap with other teams. In this post we’ll break down a few use cases to show how the same data can drive multiple cybersecurity functions.

Cybersecurity products, and the industry more generally, are in a period of consolidation. Over the last ten years, companies have sought to advance their cybersecurity posture by adding unique roles, skillsets, and tools: build up your system administration and IT asset staff to secure and catalog the devices in your network, add some network and host observability utilities to make sure you know what you have and can pay close attention to the activity in your network, add a SOC to watch and handle alerts as they come up, pick up a few IR folks to handle the positive alerts and hunt for things, add some pen testing and red team folks to test the controls repeatedly, and so on. While this specific sequence isn’t universal, every company’s cybersecurity org follows a similar natural growth progression. In response to this demand, the market has provided training and tools, and has naturally fallen into a rhythm of identifying unique processes, workflows, and, most importantly, techniques that are tailored to these specializations. This has produced many cybersecurity professionals who are exceptionally skilled in their unique area of expertise.

While this specialization has been productive for creating in-depth capabilities for the most advanced security organizations, it has also created a severe case of siloing across every business, wherein each team works in isolation at an advanced level and, at best, communicates with other teams through a combination of tickets, messages, emails, and reports. Less mature and smaller security organizations are harmed even more by this, as their team members often have to perform a variety of roles that cross the conventional job boundaries of larger and more mature teams. This necessary pivoting means that vanishingly few tools will serve all of the roles these teams are trying to fill, and it leaves them purchasing tools that are too specialized for their needs, wasting time and effort because only a small percentage of the purchased capability is necessary for their organization.

In the end, the skill and success of an individual security team rely heavily on institutional knowledge and experience stored in the minds of its individual engineers, and implemented through a combination of personal note-taking and Excel spreadsheets. Functionally, this means numerous hours wasted on repeated effort, lost context, reading, and documentation. Is this necessary? Are your teams using disparate tools? Do they need them? How do you measure, improve, and manage the performance of your cybersecurity organization? The majority of these teams follow much the same workflow in a general sense: they work off the data that the previous team or process identified, add to it, aggregate and analyze it, and then pass it on to the next team for monitoring or actioning.

Security Workflows (In General)

While a PSIRT team and a SOC might often seem miles apart, their workflows at their core have the same foundational steps. This is generally true across most roles in security organizations. In the interest of looking at the similarities in data and process between different security teams, we’ve intentionally abstracted the stages of these workflows, to make the process clearer and provide a lexicon for their articulation. We’ve separated these steps out as such:

Originating Event

There is always a beginning to the processes in security. Usually, this is an initial tidbit of information that will require tracking, analysis, and handling. An originating event is your team’s call to action: something to remediate, hunt for, or otherwise resolve. What counts as resolved will vary across teams (we patched the vulnerability, we published a report, we did a signature sweep, etc.) but the investigative and resolution process is usually some combination of assessing applicability to your organization, cross referencing against your assets, prioritizing, and then adding/removing/changing something in a software or systems deployment.

While there are many varieties of originating event, they all serve the same function: to provide the relevant information to act on in a clear, concise manner, and to provide an anchor to which a process can be tethered for tracking.

This can be something as limited as an alert to assess, or as nebulous as a new policy document or threat intelligence report published with many potential implications for a given enterprise network. These originating events could be identified and tracked as part of a larger queue, doled out by leadership within the organization, or passed as a requirement from one team to another, often in email or direct messaging. What happens to these within the organization as they’re being actioned? Can other teams work together on a single event in tandem? Can the next team see what the prior did or found with the event? Are they archivally retained for searching and revisiting? Often the answer is no, leading to duplicative effort, confusion, and alert fatigue as analysts and engineers regularly encounter and deal with the same events, and have to keep revisiting and discussing a line of effort with others.
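One way to keep an originating event visible as it moves between teams is to hold it as a single shared record with an append-only history. The Python below is only a minimal sketch of that idea: the class name `OriginatingEvent`, its fields, and the team names are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class OriginatingEvent:
    """A hypothetical shared record for one originating event."""
    event_id: str
    source: str   # e.g. "alert", "threat report", "policy document"
    summary: str
    history: list = field(default_factory=list)

    def add_note(self, team: str, note: str) -> None:
        # Append-only: earlier entries are never edited, so the next team
        # can see exactly what the previous one did or found.
        self.history.append((datetime.now(timezone.utc), team, note))

    def notes_from(self, team: str) -> list:
        # Return only the note text recorded by one team.
        return [note for _, t, note in self.history if t == team]


event = OriginatingEvent("EVT-1", "threat report", "New exploit for a VPN appliance")
event.add_note("SOC", "No matching alerts in the last 30 days")
event.add_note("IR", "Swept endpoints for published indicators; none found")

for when, team, note in event.history:
    print(when.isoformat(), team, note)
```

Because every team appends to the same record, the questions above (who worked on it, what they found, can it be searched later) can be answered from the record itself rather than from email threads.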

Data Pivoting

With a process engaged and the initial spurring event documented, the individual or team actioning the event will need to scour the resources at their disposal for additional information that will help them remediate or resolve the situation. Many individual practitioners will have created their own mental model for handling their areas of responsibility. Which systems can and should be queried varies from organization to organization, but fundamentally the goal is to pivot off the originating event to any additional information available that can contextualize the originating event to the business.

Practically, this means identifying the impact of the originating event on systems within your enterprise landscape: identifying hosts, network traffic, managed assets, logs, forensics data, deployed software, SBOMs, or other information with which you can place the originating event’s potential effects within the network. In so doing, the team can determine the originating event’s priority and significance. It could be that the event and associated actions in question can be deprioritized or ignored entirely if the business is not put at any significant risk. Almost every team is overwhelmed by the number of actions that can and should be taken to secure their systems, as cybersecurity is a continuous growth process, so this stage is also used to determine the order of importance, stacking the many tasks required of the team in a manageable queue for handling.

In order to have sufficient evidence and understanding to prove that your remediations, defenses, policies, and responses are working as intended, the team needs to track and make connections among the aggregated data so that it can justify and illustrate the results of its efforts. At this point in the process, connections between old and new data are established, and multiple data sources are combined by applying confidence levels (at least mentally) to determine exactly how and to what extent the originating event will affect the organization’s systems and processes. Ideally at this stage of the process, analysts and engineers are determining the remediation or mitigation steps, documenting relationships between data, and retaining this information within knowledge stores for future reference and usage in later instances of workflow engagement. If the team is responsible for maintaining their own internal processes, unique pivoting techniques and strategies for gathering new information should be documented at this point in the process. This retained documentation can then be used by other analysts who are not as familiar with the techniques used, or for training new personnel in the steps associated with the workflow. This pipeline maturity is in part what separates the most capable and resolute security organizations from the rest; they know exactly what steps and tools can enable them to swiftly and effectively remediate originating events, in a repeatable way.
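The confidence weighting described above usually happens in an analyst’s head. As a rough sketch of what making it explicit could look like, the Python below combines per-source confidence values with a noisy-OR rule, which assumes the sources are independent. The rule itself, the source names, and the numbers are illustrative assumptions rather than a prescribed method.

```python
from math import prod


def combined_confidence(confidences: dict[str, float]) -> float:
    """Noisy-OR: chance that at least one independent source is right."""
    for name, c in confidences.items():
        if not 0.0 <= c <= 1.0:
            raise ValueError(f"confidence for {name!r} must be in [0, 1]")
    return 1.0 - prod(1.0 - c for c in confidences.values())


# Hypothetical evidence that a host is affected by the originating event.
evidence = {
    "asset_inventory": 0.6,   # inventory says the software is installed
    "network_traffic": 0.5,   # traffic resembles the affected service
}
score = combined_confidence(evidence)
print(f"combined confidence: {score:.2f}")
```

Writing the weights down, even crudely, gives later analysts something to question and refine instead of having to reconstruct a colleague’s judgment.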

Resolution and Reporting

All the analysis in the world won’t make any difference if actions aren’t taken to improve operational posture based on analysis performed during the workflow. If the team handling the process is also tasked and resourced to action the information gathered throughout their workflow, then at this point the team can move to tweak their systems to catch threats, patch systems to defend/block/notify, or alter guidance. However, if another team is responsible for actioning the results of an investigation, then it is crucial that the findings, recommendations, and rationale are provided to that team in an easily understandable and actionable way.

Once all the data pertaining to the originating event, the actions to take, and the contextualization to the business has come together into a cohesive picture, this information needs to be shared with the rest of the business’s security organization. Even if the team doing the analysis has moved to remediate any immediate threats to the business, their efforts can and should be shared with other stakeholders, either to engage those stakeholders’ own efforts or just to maintain awareness of the efforts being made. These reports should make a meaningful effort to describe the who, what, when, where, and why of a line of effort, and ideally follow some amount of standardization or style guidelines to make them as easily digestible as possible. If the reporting needs to be consumed by any other teams for their own processes, then it should be tailored to the needs of those teams. Having as much information as possible available to those teams to handle their own workflows removes duplication of effort and enables the entire enterprise to work as a cohesive whole. In practice, however, those separate teams often have to read the reporting and tickets created by another team and reverse engineer the analysis that put the work on their plate, because the final reporting and handoff lack some of the crucial context and data needed to enable the next stage of the process.

The Data Divide

While each team will have components unique to their processes, workflows, and playbooks, all of them invariably end up relying on the general steps above, and data aggregation and analysis is the element that Outcome Security feels to be most unifying. Every single role and workflow in cybersecurity will have some data that overlaps with another. Keeping each team informed and aligned with overall efforts in an organization increases the effectiveness and efficiency of all of them. If these data components are unified so that everyone is working off the same information, company security teams can be armed to make clearer decisions in prioritization and focus, delivering more tangible security impacts to the business, and maintaining better awareness over time of the context that led to those decisions.

As a case study, let’s look at just one data type within cybersecurity that is fundamental to each role in different ways, to illustrate how this works in practice. Specifically, let’s talk about vulnerabilities and exploits.

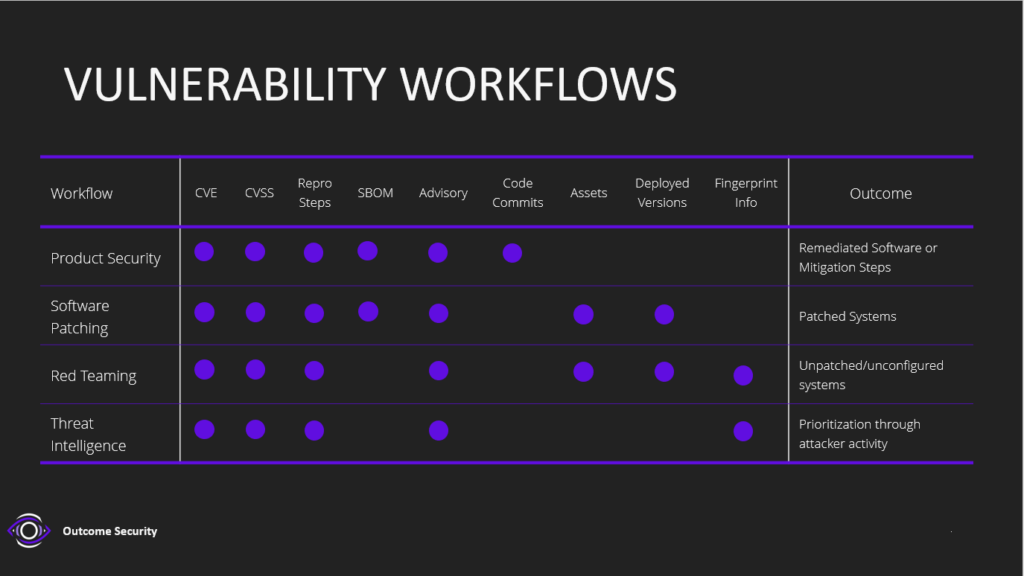

The table below lays out a few separate workflow categories, how their efforts affect the greater lifecycle, and the data tracked at each stage.

| Workflow | Outcome | Tracked Data |
| --- | --- | --- |
| Product Security | Remediated software or mitigation steps | CVE, CVSS, Repro steps, affected versioning, SBOMs, code commits, advisory release |
| Software Patching | Patched systems | Assets, Deployed Software versions, CVE, CVSS, Repro steps, affected versioning, advisory release |
| Red Teaming | Identified hosts that remain unpatched | Assets, Deployed Software versions, CVE, CVSS, Repro steps, affected versioning, advisory release |
| Threat Intelligence | Identified attacker exploitation and thus priority of remediations | CVE, CVSS, Repro steps, affected versioning, advisory release, fingerprinting |
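The overlap in the table can be checked mechanically. The short Python sketch below copies each workflow’s tracked data from the table into a set and computes what all four workflows share and what each one adds; the variable names are illustrative only.

```python
# Tracked data per workflow, copied from the table above.
tracked = {
    "Product Security": {
        "CVE", "CVSS", "Repro steps", "affected versioning",
        "SBOMs", "code commits", "advisory release",
    },
    "Software Patching": {
        "Assets", "Deployed Software versions", "CVE", "CVSS",
        "Repro steps", "affected versioning", "advisory release",
    },
    "Red Teaming": {
        "Assets", "Deployed Software versions", "CVE", "CVSS",
        "Repro steps", "affected versioning", "advisory release",
    },
    "Threat Intelligence": {
        "CVE", "CVSS", "Repro steps", "affected versioning",
        "advisory release", "fingerprinting",
    },
}

# Fields every workflow tracks, and what each workflow adds on top.
shared = set.intersection(*tracked.values())
extras = {team: fields - shared for team, fields in tracked.items()}

print("shared by all:", sorted(shared))
for team, fields in extras.items():
    print(f"{team} adds: {sorted(fields)}")
```

Five fields (CVE, CVSS, repro steps, affected versioning, and advisory release) are common to all four workflows, and Software Patching and Red Teaming track identical data.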

Product Security

All software has bugs, and some of them are exploitable, creating weaknesses for the companies and systems that run the software. To track and remediate these vulnerabilities, the industry has set up standards to handle the process end to end, though that process has its own deficiencies.

Product Security teams work with vulnerability data primarily to update or replace affected software components in the product they’re responsible for. When working with vulnerabilities, the data relevant to these teams includes descriptive information about the vulnerability itself (vulnerable code case, logic flaw, etc.), the software and/or hardware affected, the affected version, any patched version (or mitigations), and references that further articulate it. This vulnerability information might also include any potential exploit PoC or reproduction steps, as well as information that can be used to fingerprint its presence.

As part of the product security workflow:

- A vulnerability is identified in a piece of software.

- A CVE ID is registered in association with the vulnerability. At this stage we should have reproduction steps, and potentially a proof-of-concept exploit.

- The CVE is reported to owners of the software and, when reproducible, passed to their development team to action it within their code base. The information passed to them should include the reproduction steps, and if possible, a point of focus from the code base.

- A fix is tested against repro steps in various software configurations to determine the fix is valid.

- A patch or build is released, alongside a public advisory or patch notes with some indication of remediation of the vulnerability.

- The CVE is updated to reflect the patched version of the software that mitigates the vulnerability.
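The steps above can be sketched as an ordered set of stages that a vulnerability record moves through. This is a minimal Python illustration only: the stage names and the `advance` helper are hypothetical labels for the bullets above, not part of any CVE standard or tool.

```python
from enum import IntEnum


class Stage(IntEnum):
    IDENTIFIED = 1       # vulnerability found in a piece of software
    CVE_REGISTERED = 2   # CVE ID assigned; repro steps should exist
    REPORTED = 3         # passed to the software owners and developers
    FIX_TESTED = 4       # fix validated against the repro steps
    PATCH_RELEASED = 5   # patch or build shipped with a public advisory
    CVE_UPDATED = 6      # CVE updated to reflect the patched version


def advance(current: Stage) -> Stage:
    """Move to the next stage; refuse to go past the final one."""
    if current is Stage.CVE_UPDATED:
        raise ValueError("workflow already complete")
    return Stage(current + 1)


stage = Stage.IDENTIFIED
while stage is not Stage.CVE_UPDATED:
    stage = advance(stage)
    print("now at:", stage.name)
```

Recording the current stage on the shared vulnerability record lets downstream teams see how far along a fix is without asking the product security team directly.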

Software Patching

After a Product Security team completes their remediations, a public advisory is used to inform customers that they will need to mitigate the vulnerability. It’s up to those businesses (or other code bases) using the software to remediate the vulnerability within their own networks by patching systems. At a bare minimum, this means consuming the public advisory and the patched software version from the product security workflow, and then adding data to contextualize the vulnerability’s presence within their network. How many systems contain the affected software or hardware? Which ones? Are they serving crucial business functions, and is it even possible to patch them without disrupting business?

This requires engaging yet another process: gathering information about the hosts within the network, what software is deployed to them, and how much risk and “mission criticality” they carry. This information will be tracked and informed by the efforts of the risk and asset management teams (or system administrators, depending on the maturity of the business).

Once the team deploying patches has aggregated this information, they have to make a risk assessment based on it and the relative severity of the CVE to determine and justify the prioritization of the patch. Assuming they can justify any potential system downtime, they then follow the update steps associated with the release, as tracked in the CVE and the software’s documentation.
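As a rough illustration of that risk assessment, the Python sketch below orders hosts by a score that multiplies CVE severity by host criticality, and flags hosts that need a negotiated maintenance window. The scoring formula, the 1 to 5 criticality scale, the host names, and the CVSS value are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    criticality: int        # hypothetical scale: 1 (low) to 5 (mission critical)
    can_take_downtime: bool


def patch_priority(cvss: float, host: Host) -> float:
    """Illustrative score: severity scaled by how critical the host is."""
    return cvss * host.criticality


hosts = [
    Host("build-server", criticality=2, can_take_downtime=True),
    Host("payments-db", criticality=5, can_take_downtime=False),
    Host("wiki", criticality=1, can_take_downtime=True),
]
cvss = 7.5  # base score of the CVE being assessed (hypothetical)

# Highest score first: these hosts are patched earliest.
queue = sorted(hosts, key=lambda h: patch_priority(cvss, h), reverse=True)

# Hosts whose downtime has to be justified and scheduled with the business.
needs_window = [h.name for h in queue if not h.can_take_downtime]

print([h.name for h in queue])
print(needs_window)
```

A real assessment would weigh more than two inputs, but even this simple form makes the prioritization something the team can show and defend.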

Red Teaming

In the process of testing security mechanisms, all of the capabilities at the disposal of threat actors can be exercised. Because the goal is to track and remediate any deficiencies in process and tooling in the targeted network, all malicious actions must be tracked and maintained in order to deliver this information through to the Blue Team post-exercise.

As part of the red-teaming process, red teamers will seek to identify exploitable software by fingerprinting the deployed software within the network, identifying its version, and finding or implementing an exploit for the vulnerable versions present. Of particular note, if software is up to date but contains misconfiguration vulnerabilities, these can be revealed for the first time through these mechanisms. This fact can and should be tracked as a component of the vulnerability to assist the organization in remediating it. In principle, how a Red Team consumes and uses vulnerability information is almost exactly the same as how the patching team does: both workflows collect installed software/hardware versions from an asset or assets and cross-reference those versions with versions containing known vulnerabilities. The only difference, in this case, is that the patching team identifies hosts that need to be patched, and the Red Team identifies hosts that should have been patched.
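To make the shared cross-reference concrete, here is a minimal Python sketch in which both teams call the same function against an inventory. The inventory layout, the product names, and the simplified dotted-number version parsing are assumptions for illustration; real version schemes are often messier.

```python
def parse_version(text: str) -> tuple:
    # Simplified: handles only dotted numeric versions such as "2.4.0".
    return tuple(int(part) for part in text.split("."))


def vulnerable_hosts(inventory: dict, product: str, fixed_in: str) -> list:
    """Hosts running `product` at a version older than `fixed_in`."""
    fixed = parse_version(fixed_in)
    return sorted(
        host
        for host, software in inventory.items()
        if product in software and parse_version(software[product]) < fixed
    )


# Hypothetical inventory: host -> {product: installed version}
inventory = {
    "web-01": {"exampled": "2.3.9"},
    "web-02": {"exampled": "2.4.1"},
    "db-01": {"otherd": "1.0.0"},
}

# Patching team, before the patch window: hosts that need to be patched.
to_patch = vulnerable_hosts(inventory, "exampled", "2.4.0")

# Red Team, after the patch window: hosts that should have been patched.
# Here we suppose nothing was actually updated in the meantime.
missed = vulnerable_hosts(dict(inventory), "exampled", "2.4.0")

print(to_patch, missed)
```

The query is identical in both cases; only the timing and the interpretation of the result differ.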

Threat Intelligence

Businesses inevitably have a glut of mission-critical systems utilizing software that may contain these vulnerabilities, but they will have to justify the opportunity cost of the associated patching and remediation. If an organization is mature enough to have in-house CTI capabilities or to consume threat intelligence from a provider, this can also play a crucial role in the vulnerability management lifecycle by identifying priorities for remediation. When a vulnerability is identified, resources like CISA’s Known Exploited Vulnerabilities catalog and a variety of telemetry-gathering sources help determine whether threat actors are using exploits against it.

To help organizations prioritize effectively, threat intelligence sources need to be tracked alongside vulnerabilities. They provide crucial context on the capacity in which an industry vertical or region might be affected by a given vulnerability, and that context could make the difference in justifying the remediation process and addressing any concerns about business continuity. The impact of taking down a business-critical system for patching is (or should be) more justifiable if the vulnerability being patched is actively being used by malicious actors.
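A sketch of how that context might feed prioritization is shown below in Python. The `VulnContext` fields, the placeholder identifiers, and especially the multipliers are arbitrary assumptions chosen to show the shape of the idea, not recommended weights.

```python
from dataclasses import dataclass


@dataclass
class VulnContext:
    vuln_id: str               # placeholder label, not a real CVE ID
    cvss: float
    known_exploited: bool      # e.g. reported as exploited in the wild
    targets_our_sector: bool   # from in-house or provider CTI


def adjusted_priority(v: VulnContext) -> float:
    """Illustrative weighting; the multipliers are arbitrary assumptions."""
    score = v.cvss
    if v.known_exploited:
        score *= 2.0
    if v.targets_our_sector:
        score *= 1.5
    return score


vulns = [
    VulnContext("VULN-A", cvss=9.1, known_exploited=False, targets_our_sector=False),
    VulnContext("VULN-B", cvss=7.2, known_exploited=True, targets_our_sector=True),
]

ranked = sorted(vulns, key=adjusted_priority, reverse=True)
print([v.vuln_id for v in ranked])
```

In this example the lower-severity vulnerability ranks first because it is being actively exploited against the organization’s sector, which is the kind of justification a patching team needs when requesting downtime.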

As part of the prioritization and publication process, threat intelligence analysts should seek to verify in which campaigns and under what targeting criteria threat actors are using a newly identified vulnerability, and what level of exposure that vulnerability can create in practice based on how it’s being used. As an excellent example of this, the recent OpenSSL vulnerabilities (CVE-2022-3786 and CVE-2022-3602) both have a severity rating of “high” and were originally noted as being of the same scope and scale of potential abuse as the notorious “Heartbleed” vulnerability from 2014. Based on the efforts and data identified as part of the original remediation and CVEs, these vulnerabilities were set to make significant waves over vast swaths of the internet, and many security teams stood at the ready to patch them in their systems. Analysis immediately after their release has generally found them unlikely to be exploited due to the limitations of the vulnerabilities, and while it is too early to say that threat actors won’t abuse them, numerous infosec professionals have determined that they are not significant enough to justify immediate remediation, particularly as they affect only a subset of the OpenSSL versions currently deployed in the wild. This additional context encouraged the majority of security organizations to deprioritize remediation of these vulnerabilities, saving administrator time and avoiding potential business impacts from system downtime. All that said, threat intelligence is a continuous process; if analysts were to find these vulnerabilities being regularly exploited at a later time, they would have the potential to create significant impact, and threat actor usage can and should adjust that assessed priority.

The Result

Even though all these workflows characteristically fall under the purview of separate teams within mature security organizations, they all describe one single finite piece of data (“a vulnerability”) which can and should be tracked as a contiguous whole throughout the process. These processes have been written above to be essentially sequential or distinct, as often businesses handle them as such. However, substantial improvements can be made to the health and efficiency of a business by tracking all of these efforts simultaneously. By retaining cognizance of these unifying factors, and tracking them as part of a contiguous dataflow and workflow, these teams can remediate vulnerabilities faster, prioritize their remediation effectively, and continue to monitor them across their enterprise. The graphic below summarizes the teams and data components discussed above.

If product security teams determine that the reproduction steps for a particular vulnerability are complicated and require sophistication to use, or conversely if the vulnerability was exposed because it was already being used in the wild, vulnerability management teams need this context as soon as a patch is released so that they are ready, justified, and armed to patch on the day a new version of the software is released. If the threat intelligence team can say that malicious actors using this vulnerability are targeting a different industry, geographical location, etc., the business may be able to focus instead on more crucial work. If the vulnerability management team knows ahead of time that the scary new vulnerability being discussed in hushed tones doesn’t affect software currently in their network, threat intelligence teams can instead fixate on those attacks that will target them.

Focus on the outcome, not the data divide.